Planet ROS

Planet ROS - http://planet.ros.org

ROS Discourse General: Proposal: Reproducible actuator-boundary safety (SSC + conformance harness)

Hi all — I’m looking for feedback on a design question around actuator-boundary safety in ROS-based systems.

Once a planner (or LLM-backed stack) can issue actuator commands, failures become motion. Most safety work in ROS focuses on higher layers (planning, perception, behavior trees), but there’s less shared infrastructure around deterministic enforcement at the actuator interface itself.

I’m prototyping a small hardware interposer plus a draft “Safety Contract” spec (SSC v1.1) with three components:

- A machine-readable contract defining caps (velocity / acceleration / effort), modes (development vs. field), and stop semantics

- A conformance harness (including malformed-traffic handling plus fuzzing / anti-wedge tests)

- “Evidence packs” (machine-readable logs with wedge counts, latency distributions, and verifier tooling)
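As a concrete illustration of the first component, here is a minimal sketch of checking one joint command against contract caps at the actuator boundary. Everything in it is a hypothetical assumption, not part of the SSC v1.1 draft: the `SafetyContract` and `JointCommand` structs and their field names, the `enforce()` function, the rule that development mode clamps while field mode rejects, and the acceleration check against the previously forwarded velocity. Stop semantics are not modeled.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical per-joint caps; field names are illustrative, not the SSC v1.1 schema.
struct SafetyContract {
  double max_velocity;      // rad/s
  double max_acceleration;  // rad/s^2
  double max_effort;        // N*m
  bool field_mode;          // assumption: field mode rejects instead of clamping
};

// Hypothetical command as it crosses the actuator boundary.
struct JointCommand {
  double velocity;  // rad/s
  double effort;    // N*m
};

enum class Verdict { Pass, Clamped, Rejected };

// Checks `cmd` against the caps, given the last forwarded velocity and the
// control period dt in seconds. Clamps in place in development mode; in field
// mode any violation is rejected and `cmd` is left untouched.
Verdict enforce(const SafetyContract& c, double prev_velocity, double dt,
                JointCommand& cmd) {
  // Malformed traffic or an invalid contract never reaches the actuator.
  if (!std::isfinite(cmd.velocity) || !std::isfinite(cmd.effort) ||
      !std::isfinite(prev_velocity) || !(dt > 0.0) || c.max_velocity < 0.0 ||
      c.max_acceleration < 0.0 || c.max_effort < 0.0) {
    return Verdict::Rejected;
  }
  // Velocity window: inside the velocity cap and within max_acceleration * dt
  // of the previous command. prev is clamped first so that lo <= hi holds.
  const double prev = std::clamp(prev_velocity, -c.max_velocity, c.max_velocity);
  const double max_dv = c.max_acceleration * dt;
  const double lo = std::max(-c.max_velocity, prev - max_dv);
  const double hi = std::min(c.max_velocity, prev + max_dv);
  const double v = std::clamp(cmd.velocity, lo, hi);
  const double e = std::clamp(cmd.effort, -c.max_effort, c.max_effort);
  if (v == cmd.velocity && e == cmd.effort) return Verdict::Pass;
  if (c.field_mode) return Verdict::Rejected;
  cmd.velocity = v;
  cmd.effort = e;
  return Verdict::Clamped;
}
```

A conformance harness could then drive `enforce()` with malformed values (NaN, infinities, non-positive `dt`) and assert that none of them ever produces `Verdict::Pass`.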

The goal is narrow:

Not “this makes robots safe.”

But: if someone claims actuator-boundary enforcement works, there should be a reproducible way to test and audit that claim.

Some concrete design questions I’m unsure about:

• Does ROS 2 currently have a standard place where actuator-boundary invariants should live?

• Should this layer sit at the driver level, as a node wrapper, or outside ROS entirely?

• What would make a conformance harness credible to you?

• Are there prior art efforts I should be aware of?

I’m happy to share more technical detail if useful. Mostly, I’m interested in whether this layer is actually high-leverage or whether I’m solving the wrong problem.

1 post - 1 participant

ROS Discourse General: Ament (and cmake) understanding

I often add new packages to existing CMakeLists.txt files. I just follow the existing structure, and adding something is not that hard, but it is always trial and error.

Reading official docs is of course useful, but it doesn’t stick. I forget it immediately.

Is this just a skill issue, and should I invest more time in CMake and the build process? How important is it to know every line of a CMakeLists.txt? Does it make sense to focus on it specifically?

Thank you)

2 posts - 2 participants

ROS Discourse General: Piper Arm Kinematics Implementation

Piper Arm Kinematics Implementation

Abstract

This chapter implements forward kinematics (FK) and Jacobian-based inverse kinematics (IK) for the AgileX PIPER robotic arm using the Eigen linear algebra library, and implements custom interactive markers via interactive_marker_utils.

Tags

Forward Kinematics, Jacobian-based Inverse Kinematics, RVIZ Simulation, Robotic Arm DH Parameters, Interactive Markers, AgileX PIPER

Function Demonstration

Code Repository

GitHub Link: https://github.com/agilexrobotics/Agilex-College.git

1. Preparations Before Use

Reference Videos:

1.1 Hardware Preparation

- AgileX Robotics Piper robotic arm

1.2 Software Environment Configuration

- For Piper arm driver deployment, refer to: https://github.com/agilexrobotics/piper_sdk/blob/1_0_0_beta/README(ZH).MD

- For Piper arm ROS control node deployment, refer to: https://github.com/agilexrobotics/piper_ros/blob/noetic/README.MD

- Install the Eigen linear algebra library:

sudo apt install libeigen3-dev

1.3 Prepare DH Parameters and Joint Limits for AgileX PIPER

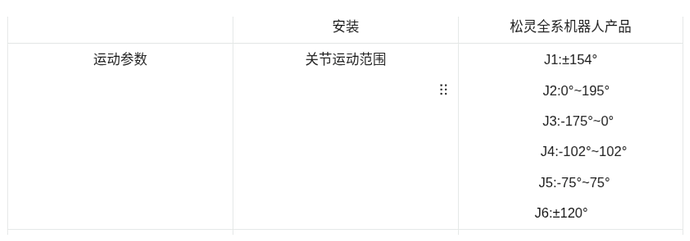

The modified DH parameter table and joint limits of the PIPER arm can be found in the AgileX PIPER user manual:

2. Forward Kinematics (FK) Calculation

FK converts the angle of each joint into the pose of a specific link of the robotic arm in 3D space. This chapter uses joint6 (the last rotary joint of the arm) as an example.

2.1 Prepare DH Parameters

- Build the FK calculation program based on the PIPER DH parameter table. From the modified DH parameter table of AgileX PIPER in Section 1.3, we obtain:

// Modified DH parameters [alpha, a, d, theta_offset]

dh_params_ = {

{0, 0, 0.123, 0}, // Joint 1

{-M_PI/2, 0, 0, -172.22/180*M_PI}, // Joint 2

{0, 0.28503, 0, -102.78/180*M_PI}, // Joint 3

{M_PI/2, -0.021984, 0.25075, 0}, // Joint 4

{-M_PI/2, 0, 0, 0}, // Joint 5

{M_PI/2, 0, 0.091, 0} // Joint 6

};

For conversion to Standard DH parameters, refer to the following rules:

Standard DH ↔ Modified DH Conversion Rules:

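- Standard DH → Modified DH:

αᵢ₋₁ (Standard) = αᵢ (Modified)

aᵢ₋₁ (Standard) = aᵢ (Modified)

dᵢ (Standard) = dᵢ (Modified)

θᵢ (Standard) = θᵢ (Modified)

- Modified DH → Standard DH:

αᵢ (Standard) = αᵢ₊₁ (Modified)

aᵢ (Standard) = aᵢ₊₁ (Modified)

dᵢ (Standard) = dᵢ (Modified)

θᵢ (Standard) = θᵢ (Modified)

Here i is the row (joint) index in each table: α and a shift by one row, while d and θ stay in place. The last Standard row has no Modified row i+1 to copy from, so its α and a are set to 0.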

The converted Standard DH parameters are:

// Standard DH parameters [alpha, a, d, theta_offset]

dh_params_ = {

{-M_PI/2, 0, 0.123, 0}, // Joint 1

{0, 0.28503, 0, -172.22/180*M_PI}, // Joint 2

{M_PI/2, -0.021984, 0, -102.78/180*M_PI}, // Joint 3

{-M_PI/2, 0, 0.25075, 0}, // Joint 4

{M_PI/2, 0, 0, 0}, // Joint 5

{0, 0, 0.091, 0} // Joint 6

};

- Prepare DH Transformation Matrices

- Modified DH Transformation Matrix:

- Rewrite the modified DH transformation matrix using Eigen:

T << cos(theta), -sin(theta), 0, a,

sin(theta)*cos(alpha), cos(theta)*cos(alpha), -sin(alpha), -sin(alpha)*d,

sin(theta)*sin(alpha), cos(theta)*sin(alpha), cos(alpha), cos(alpha)*d,

0, 0, 0, 1;

- Standard DH Transformation Matrix:

- Rewrite the standard DH transformation matrix using Eigen:

T << cos(theta), -sin(theta)*cos(alpha), sin(theta)*sin(alpha), a*cos(theta),

sin(theta), cos(theta)*cos(alpha), -cos(theta)*sin(alpha), a*sin(theta),

0, sin(alpha), cos(alpha), d,

0, 0, 0, 1;
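As a sanity check of the conversion rules, the sketch below evaluates FK with both parameter tables and both transformation matrices and compares the resulting joint6 poses. It uses plain `std::array` instead of Eigen so that it compiles on its own. `Mat4`, `mul`, `modifiedDH`, `standardDH`, `fk`, `maxAbsDiff` and the table names are hypothetical helpers, not functions from the Agilex-College repository. The two conventions should agree because a translation along x commutes with a rotation about x, so shifting α and a by one row only regroups the same product of elementary transforms.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>

constexpr double kPi = 3.14159265358979323846;
constexpr double kDeg = kPi / 180.0;

using Mat4 = std::array<std::array<double, 4>, 4>;
using DHRow = std::array<double, 4>;  // [alpha, a, d, theta_offset]
using DHTable = std::array<DHRow, 6>;

// PIPER tables as listed above (meters, radians).
const DHTable kModified = {{{0, 0, 0.123, 0},
                            {-kPi / 2, 0, 0, -172.22 * kDeg},
                            {0, 0.28503, 0, -102.78 * kDeg},
                            {kPi / 2, -0.021984, 0.25075, 0},
                            {-kPi / 2, 0, 0, 0},
                            {kPi / 2, 0, 0.091, 0}}};
const DHTable kStandard = {{{-kPi / 2, 0, 0.123, 0},
                            {0, 0.28503, 0, -172.22 * kDeg},
                            {kPi / 2, -0.021984, 0, -102.78 * kDeg},
                            {-kPi / 2, 0, 0.25075, 0},
                            {kPi / 2, 0, 0, 0},
                            {0, 0, 0.091, 0}}};

Mat4 mul(const Mat4& x, const Mat4& y) {
  Mat4 r{};  // zero-initialized
  for (int i = 0; i < 4; ++i)
    for (int j = 0; j < 4; ++j)
      for (int k = 0; k < 4; ++k) r[i][j] += x[i][k] * y[k][j];
  return r;
}

// Modified DH link transform: Rx(alpha) * Tx(a) * Rz(theta) * Tz(d)
Mat4 modifiedDH(double alpha, double a, double d, double theta) {
  const double ct = std::cos(theta), st = std::sin(theta);
  const double ca = std::cos(alpha), sa = std::sin(alpha);
  return {{{ct, -st, 0, a},
           {st * ca, ct * ca, -sa, -sa * d},
           {st * sa, ct * sa, ca, ca * d},
           {0, 0, 0, 1}}};
}

// Standard DH link transform: Rz(theta) * Tz(d) * Tx(a) * Rx(alpha)
Mat4 standardDH(double alpha, double a, double d, double theta) {
  const double ct = std::cos(theta), st = std::sin(theta);
  const double ca = std::cos(alpha), sa = std::sin(alpha);
  return {{{ct, -st * ca, st * sa, a * ct},
           {st, ct * ca, -ct * sa, a * st},
           {0, sa, ca, d},
           {0, 0, 0, 1}}};
}

// Chain six link transforms, adding each theta offset to the joint value.
Mat4 fk(const DHTable& dh, const std::array<double, 6>& q,
        Mat4 (*link)(double, double, double, double)) {
  Mat4 T{};
  for (int i = 0; i < 4; ++i) T[i][i] = 1.0;  // identity
  for (std::size_t i = 0; i < 6; ++i)
    T = mul(T, link(dh[i][0], dh[i][1], dh[i][2], q[i] + dh[i][3]));
  return T;
}

double maxAbsDiff(const Mat4& x, const Mat4& y) {
  double m = 0.0;
  for (int i = 0; i < 4; ++i)
    for (int j = 0; j < 4; ++j) m = std::max(m, std::fabs(x[i][j] - y[i][j]));
  return m;
}
```

For example, `maxAbsDiff(fk(kModified, q, modifiedDH), fk(kStandard, q, standardDH))` should stay at floating-point round-off for any joint vector `q`.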

- Implement the core function computeFK() for FK calculation. See the complete code in the repository: https://github.com/agilexrobotics/Agilex-College.git

Eigen::Matrix4d computeFK(const std::vector<double>& joint_values) {

// Check if the number of input joint values is sufficient (at least 6)

if (joint_values.size() < 6) {

throw std::runtime_error("Piper arm requires at least 6 joint values for FK");

}

// Initialize identity matrix as the initial transformation

Eigen::Matrix4d T = Eigen::Matrix4d::Identity();

// For each joint:

// Calculate actual joint angle = input value + offset

// Get fixed parameter d

// Calculate the transformation matrix of the current joint and accumulate to the total transformation

for (size_t i = 0; i < 6; ++i) {

double theta = joint_values[i] + dh_params_[i][3]; // θ = joint_value + θ_offset

double d = dh_params_[i][2]; // d = fixed d value (for rotary joints)

T *= computeTransform(

dh_params_[i][0], // alpha

dh_params_[i][1], // a

d, // d

theta // theta

);

}

// Return the final transformation matrix

return T;

}

2.2 Verify FK Calculation Accuracy

- Launch the FK verification program:

ros2 launch piper_kinematics test_fk.launch.py

- Launch the RVIZ simulation program, enable the TF tree display, and check whether the pose of link6_from_fk (the end-effector pose calculated by FK) coincides with the original link6 (the frame driven by joint_state_publisher):

ros2 launch piper_description display_piper_with_joint_state_pub_gui.launch.py

The two frames coincide closely: the difference between link6_from_fk and link6 is within roughly four decimal places.

3. Inverse Kinematics (IK) Calculation

The IK calculation essentially determines the joint angles required to move the arm’s end-effector to a given target point.

3.1 Confirm Joint Limits

- Joint limits of the PIPER arm must be defined to ensure the IK solution path does not exceed physical constraints (preventing arm damage or safety hazards).

- From Section 1.3, the joint limits of the PIPER arm are:

- The joint limit matrix is defined as:

// Floating-point literals are required: 154/180 is integer division in C++ and evaluates to 0

std::vector<std::pair<double, double>> limits = {

{-154.0/180*M_PI, 154.0/180*M_PI}, // Joint 1

{0.0, 195.0/180*M_PI}, // Joint 2

{-175.0/180*M_PI, 0.0}, // Joint 3

{-102.0/180*M_PI, 102.0/180*M_PI}, // Joint 4

{-75.0/180*M_PI, 75.0/180*M_PI}, // Joint 5

{-120.0/180*M_PI, 120.0/180*M_PI} // Joint 6

};
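One detail worth checking in any such limit table: in C++ an expression like `154/180*M_PI` is evaluated left to right, so `154/180` is integer division, yields `0`, and the whole limit collapses to zero. Writing the conversion in floating point avoids this. The sketch below shows the difference and applies the limits with `std::clamp`, as `computeIK()` does; `degToRad`, `kPiperLimits` and `clampToLimits` are hypothetical helper names, not from the repository.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

constexpr double kPi = 3.14159265358979323846;

// Degrees to radians with floating-point arithmetic throughout.
constexpr double degToRad(double deg) { return deg / 180.0 * kPi; }

// PIPER joint limits from Section 1.3, in radians.
const std::vector<std::pair<double, double>> kPiperLimits = {
    {degToRad(-154), degToRad(154)},  // Joint 1
    {degToRad(0), degToRad(195)},     // Joint 2
    {degToRad(-175), degToRad(0)},    // Joint 3
    {degToRad(-102), degToRad(102)},  // Joint 4
    {degToRad(-75), degToRad(75)},    // Joint 5
    {degToRad(-120), degToRad(120)},  // Joint 6
};

// Clamp each joint value into its [lower, upper] interval.
std::vector<double> clampToLimits(
    std::vector<double> q,
    const std::vector<std::pair<double, double>>& limits) {
  for (std::size_t i = 0; i < q.size() && i < limits.size(); ++i)
    q[i] = std::clamp(q[i], limits[i].first, limits[i].second);
  return q;
}
```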

3.2 Step-by-Step Implementation of Jacobian Matrix Method for IK

Solution Process:

1. Calculate error e:

The difference between the current pose and the target pose (a 6-dimensional vector: 3 components for position + 3 for orientation).

2. Is error e below tolerance?

- Yes: Return the current θ as the solution.

- No: Proceed to iterative optimization.

3. Calculate the Jacobian matrix J: a 6×6 matrix.

4. Calculate the damped pseudoinverse:

J⁺ = Jᵀ(JJᵀ + λ²I)⁻¹

λ is the damping coefficient (it avoids numerical instability in singular configurations).

5. Calculate the joint angle increment:

Δθ = J⁺e

Adjust the joint angles using the error e and the pseudoinverse.

6. Update the joint angles:

θ = θ + Δθ

Apply the adjustment to the current joint angles.

7. Apply joint limits.

8. Normalize joint angles.

9. Maximum iterations reached?

- No: Return to Step 1 and recompute the error at the updated θ.

- Yes: Throw a non-convergence error.
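The same loop can be tried end to end on a much smaller problem. The sketch below runs these steps on a hypothetical two-link planar arm with unit link lengths. Here the error is a 2D position and J is 2×2, so (JJᵀ + λ²I) can be inverted in closed form without Eigen. The arm, its joint limits, `planarFK`, `wrapToPi` and `solvePlanarIK` are illustrative assumptions, not part of the PIPER code; λ = 0.1 and 100 iterations mirror the defaults mentioned in `computeIK()`. One design choice differs on purpose: this sketch wraps to [-π, π] before clamping, so every returned angle stays inside its limits. `computeIK()` clamps first and normalizes afterwards. If `normalizeJointAngles()` wraps to [-π, π] as its comment says, a range wider than ±π, such as joint 2's 0° to 195°, could then be pushed back outside its limits.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <stdexcept>

constexpr double kPi = 3.14159265358979323846;
using Vec2 = std::array<double, 2>;

// Hypothetical limits: joint 1 in [-pi, pi], joint 2 in [0, 2.5] rad.
constexpr Vec2 kLower = {-kPi, 0.0};
constexpr Vec2 kUpper = {kPi, 2.5};

// FK of a planar 2-link arm with unit link lengths: end-effector (x, y).
Vec2 planarFK(const Vec2& q) {
  return {std::cos(q[0]) + std::cos(q[0] + q[1]),
          std::sin(q[0]) + std::sin(q[0] + q[1])};
}

// Wrap an angle into [-pi, pi].
double wrapToPi(double angle) { return std::atan2(std::sin(angle), std::cos(angle)); }

// Damped least-squares IK following steps 1-9 above.
Vec2 solvePlanarIK(Vec2 q, const Vec2& target, double lambda = 0.1,
                   double tol = 1e-6, int max_iter = 100) {
  for (int iter = 0; iter < max_iter; ++iter) {
    // 1. Error e = target - current (position only in this 2D example).
    const Vec2 p = planarFK(q);
    const Vec2 e = {target[0] - p[0], target[1] - p[1]};
    // 2. Converged?
    if (std::hypot(e[0], e[1]) < tol) return q;
    // 3. Analytic Jacobian J = d(x, y) / d(q1, q2).
    const double s1 = std::sin(q[0]), c1 = std::cos(q[0]);
    const double s12 = std::sin(q[0] + q[1]), c12 = std::cos(q[0] + q[1]);
    const double j00 = -s1 - s12, j01 = -s12, j10 = c1 + c12, j11 = c12;
    // 4. A = J*J^T + lambda^2*I = [[a, b], [b, d]]; solve A*y = e.
    const double a = j00 * j00 + j01 * j01 + lambda * lambda;
    const double b = j00 * j10 + j01 * j11;
    const double d = j10 * j10 + j11 * j11 + lambda * lambda;
    const double det = a * d - b * b;  // > 0: A is positive definite for lambda != 0
    const double y0 = (d * e[0] - b * e[1]) / det;
    const double y1 = (a * e[1] - b * e[0]) / det;
    // 5. delta_theta = J^T * y = J^T (J J^T + lambda^2 I)^-1 e
    const Vec2 dq = {j00 * y0 + j10 * y1, j01 * y0 + j11 * y1};
    // 6-8. Update, wrap to [-pi, pi], then clamp to the joint limits.
    for (int i = 0; i < 2; ++i)
      q[i] = std::clamp(wrapToPi(q[i] + dq[i]), kLower[i], kUpper[i]);
  }
  // 9. Iteration cap reached without meeting the tolerance.
  throw std::runtime_error("IK did not converge within maximum iterations");
}
```

An unreachable target (farther than 2 from the base) never meets the tolerance, so it ends in the same exception that the PIPER solver throws.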

Core Function computeIK():

std::vector<double> computeIK(const std::vector<double>& initial_guess,

const Eigen::Matrix4d& target_pose,

bool verbose = false,

Eigen::VectorXd* final_error = nullptr) {

// Initialize with initial guess pose

if (initial_guess.size() < 6) {

throw std::runtime_error("Initial guess must have at least 6 joint values");

}

std::vector<double> joint_values = initial_guess;

Eigen::Matrix4d current_pose;

Eigen::VectorXd error(6);

bool success = false;

// Start iterative calculation

for (int iter = 0; iter < max_iterations_; ++iter) {

// Calculate FK for the current joint values to get position and orientation

current_pose = fk_.computeFK(joint_values);

// Calculate the error between the current pose and the target pose

error = computePoseError(current_pose, target_pose);

if (verbose) {

std::cout << "Iteration " << iter << ": error norm = " << error.norm()

<< " (pos: " << error.head<3>().norm()

<< ", orient: " << error.tail<3>().norm() << ")\n";

}

// Check if error is below tolerance (separate for position and orientation)

if (error.head<3>().norm() < position_tolerance_ &&

error.tail<3>().norm() < orientation_tolerance_) {

success = true;

break;

}

// Calculate Jacobian matrix (analytical by default)

Eigen::MatrixXd J = use_analytical_jacobian_ ?

computeAnalyticalJacobian(joint_values, current_pose) :

computeNumericalJacobian(joint_values);

// Use Levenberg-Marquardt (damped least squares)

// Δθ = Jᵀ(JJᵀ + λ²I)⁻¹e

// θ_new = θ + Δθ

Eigen::MatrixXd Jt = J.transpose();

Eigen::MatrixXd JJt = J * Jt;

// lambda_: damping coefficient (default 0.1) to avoid numerical instability in singular configurations

JJt.diagonal().array() += lambda_ * lambda_;

Eigen::VectorXd delta_theta = Jt * JJt.ldlt().solve(error);

// Update joint angles

for (int i = 0; i < 6; ++i) {

// Apply adjustment to current joint angle

double new_value = joint_values[i] + delta_theta(i);

// Ensure updated θ is within physical joint limits

joint_values[i] = std::clamp(new_value, joint_limits_[i].first, joint_limits_[i].second);

}

// Normalize joint angles to [-π, π] (avoid unnecessary multi-turn rotation)

normalizeJointAngles(joint_values);

}

// Throw exception if no solution is found within max iterations (100)

if (!success) {

throw std::runtime_error("IK did not converge within maximum iterations");

}

// Calculate final error (if required)

if (final_error != nullptr) {

current_pose = fk_.computeFK(joint_values);

*final_error = computePoseError(current_pose, target_pose);

}

return joint_values;

}
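`computePoseError()` is not listed in the post. One common way to build a 6-dimensional error with the layout `computeIK()` expects (position in `head<3>()`, orientation in `tail<3>()`) is the position difference plus the orientation error e_o = ½(n × n_d + s × s_d + a × a_d). Here n, s, a are the columns of the current rotation matrix and n_d, s_d, a_d those of the target. The sketch below implements that formula with plain arrays. It is an assumption about what such a function could look like, not the repository's implementation, and the orientation term equals sin(angle)·axis, so it is accurate mainly for small rotation errors. `Mat4`, `Vec3`, `Vec6`, `cross`, `column` and `poseError` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat4 = std::array<std::array<double, 4>, 4>;  // homogeneous transform
using Vec3 = std::array<double, 3>;
using Vec6 = std::array<double, 6>;  // [position error, orientation error]

Vec3 cross(const Vec3& u, const Vec3& v) {
  return {u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2],
          u[0] * v[1] - u[1] * v[0]};
}

// j-th column of the rotation part of T.
Vec3 column(const Mat4& T, int j) { return {T[0][j], T[1][j], T[2][j]}; }

// Error from `current` to `target`, both expressed in the base frame,
// with the same sign convention as e = target - current.
Vec6 poseError(const Mat4& current, const Mat4& target) {
  Vec6 e{};
  for (int i = 0; i < 3; ++i) e[i] = target[i][3] - current[i][3];
  for (int j = 0; j < 3; ++j) {
    const Vec3 c = cross(column(current, j), column(target, j));
    for (int i = 0; i < 3; ++i) e[3 + i] += 0.5 * c[i];
  }
  return e;
}
```

For a target rotated by δ about z relative to the current frame, the orientation part comes out as (0, 0, sin δ), which points in the direction the IK update should rotate.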

3.3 Publish 3D Target Points for the Arm Using Interactive Markers

- Install ROS2 dependency packages:

sudo apt install ros-${ROS_DISTRO}-interactive-markers ros-${ROS_DISTRO}-tf2-ros

- Launch interactive_marker_utils to publish 3D target points:

ros2 launch interactive_marker_utils marker.launch.py

- Launch RVIZ2 to observe the marker:

- Drag the marker and use ros2 topic echo to verify that the published target point updates:

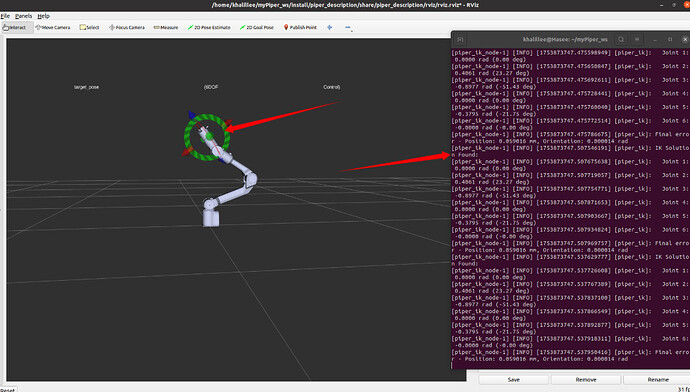

3.4 Verify IK Correctness in RVIZ via Interactive Markers

- Launch the AgileX PIPER RVIZ simulation demo (the model will not display correctly without joint_state_publisher):

ros2 launch piper_description display_piper.launch.py

- Launch the IK node and the interactive_marker node (in the same launch file). The arm will display correctly after a successful launch:

ros2 launch piper_kinematics piper_ik.launch.py

- Control the arm for IK calculation using interactive_marker:

- Drag the interactive_marker to see the IK solver calculate joint angles in real time:

- If the interactive_marker is dragged to an unsolvable position, an exception will be thrown:

4. Verify IK on the Physical PIPER Arm

- First, run the scripts that set up CAN communication with the PIPER arm:

cd piper_ros

./find_all_can_port.sh

./can_activate.sh

- Launch the physical PIPER control node:

ros2 launch piper my_start_single_piper_rviz.launch.py

- Launch the IK node and the interactive_marker node (in the same launch file). The arm will move to the HOME position:

ros2 launch piper_kinematics piper_ik.launch.py

- Drag the interactive_marker and observe the movement of the physical PIPER arm.

1 post - 1 participant

ROS Discourse General: ROS2 news & updates wanted

Hi, I’m the admin of a robotics group with 412K members.

My group includes all kinds of robotics enthusiasts including some ROS enthusiasts.

I’d like to encourage you to post in my group. No strings attached. You get exposure worldwide.

Commercial robotics posts are allowed, as long as they are not spammy.

facebook / groups/351994643955858

For your understanding, I don’t get paid for moderating the group. I just want to make it a better place for robotics enthusiasts.

Thank you for reading.

3 posts - 3 participants

ROS Discourse General: Building and Integrating AI Agents for Robotics — Experiences, Tools, and Best Practices

I’ve been exploring how AI agents are being developed and used to interact with robotic systems, especially within ROS and ROS 2 environments. There are some exciting projects in the community — from NASA JPL’s open‑source ROSA agent that lets you query and command ROS systems with natural language, to community efforts building AI agents for TurtleSim and TurtleBot3 using LangChain and other agent frameworks.

I’d love to start a discussion around AI agent design, implementation, and real‑world use in robotics:

- Which AI agent frameworks have you experimented with for robotics?

For example, have you used ROSA, RAI, LangChain‑based agents, or custom solutions? What worked well, and what limitations did you encounter?

- How do you handle multi‑modal inputs and outputs in your agents?

(e.g., combining natural language, sensor data, and robot commands)

- What strategies do you use for planning and action execution with your agent?

Do you integrate RL policies, behavior trees, skill libraries, or other reasoning approaches?

- What tooling or libraries do you recommend for scalable agent performance?

Have you found certain profiling tools, API integrations, or frameworks particularly helpful?

- What are the biggest challenges you’ve faced when deploying your AI agent on real robots?

(e.g., latency, safety, unexpected robot behavior, or integration issues)

- Are there any resources, examples, or papers that helped you with agent development?

I’m keen to share references and compare experiences.

Let’s share our experiences and recommendations — whether you’re just starting to explore AI agents or you’ve already built something that interacts with real robotic systems!

1 post - 1 participant

ROS Discourse General: What are the recommended learning resources for mastering ROS quickly?

What are the recommended learning resources for mastering ROS quickly?

4 posts - 4 participants

ROS Discourse General: 🔥 the ros2 competition is your ticket to the world of robotics, where job, university, and a real robot await!

Do you want to do more than just “play” with robots—do you want to become a sought-after specialist, invited to join teams, companies, and labs?

Then the ROS2 competition is your perfect start. Here’s why:

It’s not just a game—it’s a real skill that employers value.

ROS2 is the industry standard in robotics. It’s required for job openings from Sber’s Robotics Center to Boston Dynamics.

By participating in the competition, you’re not learning from a textbook—you’re solving a real problem: a robot must navigate autonomously, recognize objects, and manipulate them, just like in service and industrial robotics.

Everything is within reach, even for a student

You can build a robot for just 100–300 dollars.

Divide it among a team—it’s less than a gym membership.

And finding a sponsor for that amount? It’s easy—especially when you present a real project, not just an idea.

You’ll have a working robot—not a toy, but a tool for future projects.

After the competition, you’ll have a fully functional autonomous robot capable of:

- Navigating the room

- Grasping objects

- Working in the real world

This is your personal portfolio project, which will open doors to internships and research groups.

Everything is already there—you’re not starting from scratch.

Playing field? Just order a Charuco banner from any advertising agency—matte, inexpensive, ready to go.

Robot? There’s a baseline configuration on GitHub—a basic robot you can start with.

Training? A free, public course on ROS2 on Stepik with step-by-step instructions—everything from installation to running algorithms.

You’ll learn more than just ROS2—you’ll master the entire engineering stack.

- Linux

- Configuring local networks

- C++, Python

- Computer vision

- Path planning

- Manipulator control

And much more—all in one project!

And starting in 2027, winning the ROS2 competition will earn you extra points when applying to universities and graduate schools!

You’re not just participating—you’re investing in your future.

Today—training. Tomorrow—an advantage over other applicants.

And yes—it’s fun!

You’ll work in a team, solve puzzles, see how your code brings hardware to life…

It’s adrenaline, excitement, and pleasure: everything that made us fall in love with robotics in the first place.

Don’t wait for the “perfect moment.” The perfect moment is now.

Register for the ROS2 competition, and in one month you won’t be dreaming about a career in robotics…

You’ll already be there.

Click “Register”—while others are thinking, you’re already building the future.

Get inspired by this song!

An article explaining the competition in detail.

Competition regulations and rules.

Video explanation of the competition regulations and rules.

We invite teams from all countries. The competition’s lead organizers can provide translation into English.

The competition is being held as part of the ROS Meetup conference on robotics and artificial intelligence in robotics, March 21-22, 2026, in Moscow.

We’d like to host an international ROS2 competition in Moscow every year. If you’d like to help us with this, please let us know.

1 post - 1 participant

ROS Discourse General: 🚀 Invitation to the scientific section at the ROS Meetup 2026 conference in Moscow. Remote participation is possible

CFP-ROSRM2026 - Eng.pdf (585.6 KB)

The Robot Operating System Research Meetup will be held for the first time in 2026 as part of the scientific track of the annual ROS Meetup. This is an international scientific forum dedicated to the discussion of artificial intelligence methods in robotics.

Attention, scientists and researchers!

Registration for scientific papers is now OPEN! Papers can be submitted to the ROSRM 2026 scientific section, which is dedicated to artificial intelligence methods in robotics and will be held at the ROS Meetup conference on March 20-22, 2026, in Moscow. Don’t miss your chance to present your work and be published in a Scopus-listed journal!

Article Topics: Intelligent robotics, robotic algorithms, deep learning, reinforcement learning, agents in robotics, computer vision, navigation and control.

Submission Procedure:

- Submit your abstract or full article before the ROS Meetup conference.

- If your abstract is accepted, you will present your paper in the scientific section at the conference, receive feedback, and receive recommendations for improvement. Address: Moscow Institute of Physics and Technology, Dolgoprudny, Russia, or remotely via videoconference.

- After the conference, revise your article based on the comments received and resubmit it.

- Have your article published in the journal Optical Memory and Neural Networks (indexed in Scopus and WoS, Q3, and included in the White List of Journals)!

Article Submission:

Articles must be submitted through OpenReview. Details on preparing materials and the registration fee will be published on the conference website: rosmeetup.ru/science-eng

Accepted articles will be published in the journal Optical Memory and Neural Networks, indexed in Scopus, ensuring your work is visible internationally! Publications also count toward master’s and doctoral programs.

Any questions? Ask Dmitry Yudin.

Don’t miss the chance to advance your scientific career and get published in an international journal! Submit your article and join this important scientific event!

You can also provide a link to the source code of your ROS2 package (this is optional). This way, we support open source and the ROS philosophy of reusing software components across different robots!

IMPORTANT DATES

March 9, 2026 — Abstract submission deadline

March 16, 2026 — Program committee decision on paper acceptance

March 19, 2026 — Participant registration

March 21–22, 2026 — Conference

April 20, 2026 — Full article submission deadline

May 25, 2026 — Notification of article acceptance

Fill out the submission form on OpenReview. For now, the abstract alone is enough!

1 post - 1 participant

ROS Discourse General: NVIDIA Isaac ROS 4.1 for Thor has arrived

NVIDIA Isaac ROS 4.1 for Thor is now live.

NVIDIA Isaac ROS 4.1 is now available. This open-source collection of accelerated ROS 2 packages and reference applications adds more flexibility for building and deploying on Jetson AGX Thor.

This release introduces a Docker-optional development and deployment workflow, with new Virtual Environment and Bare Metal modes that make it easier to integrate Isaac ROS packages into your existing setup.

We’ve also made several key updates across the stack. Isaac ROS Nvblox now supports improved dynamics with LiDAR and motion compensation, and Isaac ROS Visual SLAM adds support for RGB-D cameras. There’s a new 3D-printable multi-camera rig for mounting RealSense cameras directly to Jetson AGX Thor, along with canonical URDF poses to get you started quickly.

On the sim-to-real side, a new gear assembly tutorial walks through training a reach policy in simulation and deploying it to a UR10e arm. And for data movement, you can now send and receive point clouds using the CUDA with NITROS API.

Check out the full details here, and let us know what you build with 4.1!

1 post - 1 participant

ROS Discourse General: Henki ROS 2 Best Practices - For People and AI

Hi all!

We’ve decided to write down and publish some of our best practices for ROS 2 development at Henki Robotics! The list of best practices has been compiled from years of experience in developing ROS applications, and we wanted to make this advice freely available, as we believe that some of these simple tips can have a huge impact on a project architecture and maintainability.

In addition to having this advice available for developers, we built the repository so that the best practices can be directly integrated with coding agents to support modern AI-driven development. You can generate quality code automatically, or review your current project. We’ve tested this using Claude, and the difference in generated code is noticeable - we added examples in the repo to showcase the impact of these best practices.

More info in the repository. We’d love to hear which practices you find useful, and which ones we are still missing from our listing.

1 post - 1 participant

ROS Discourse General: Ouster Acquires StereoLabs Creating a World-Leading Physical AI Sensing and Perception Company

Ouster asserts its position in Physical AI by acquiring StereoLabs.

2 posts - 2 participants

ROS Discourse General: Working prototype of native buffers / accelerated memory transport

Hello ROS community,

As promised in our previous Discourse post, we have uploaded the current version of our accelerated memory transport prototype to GitHub, and it is available for testing.

Note on code quality and demo readiness

At this stage, we consider this an early preview. The code is still rough around the edges and needs a thorough cleanup and review. However, all core pieces should be in place and can be shown working together.

The current demo is an integration test that connects a publisher and a subscriber through Zenoh, and exchanges messages using a demo backend for the native buffers. It will show a detailed trace of the steps being taken.

The test at this point is not a visual demo, and it does not exercise CUDA, Torch or any other more sophisticated flows. We are working on a more integrated demo in parallel, and expect to add those shortly.

Also note that the structure is currently a proposal, the details of which will be discussed in the Accelerated Memory Transport Working Group, so some of the concepts may still change over time.

Getting started

In order to get started, we recommend installing Pixi first for an isolated and reproducible environment:

curl -fsSL https://pixi.sh/install.sh | sh

Then, clone the ros2 meta repo that contains the links to all modified repositories:

git clone https://github.com/nvcyc/ros2.git && cd ros2

Lastly, run the following command to set up the environment, clone the sources, build, and run the primary test that showcases the functionality:

pixi run test test_rcl_buffer test_1pub_1sub_demo_to_demo

You can run pixi task list to see the additional commands available, or simply run pixi shell if you prefer to use colcon directly.

Details on changes

Overview

The rolling-native-buffer branch adds a proof-of-concept native buffer feature to ROS 2, allowing uint8[] message fields (e.g., image data) to be backed by vendor-specific memory (CPU, GPU, etc.) instead of always using std::vector. A new rcl_buffer::Buffer<T> type replaces std::vector for these fields while remaining backward-compatible. Buffer backends are discovered at runtime via pluginlib, and the serialization and middleware layers are extended so that when a publisher and subscriber share a common non-CPU backend, data can be transferred via a lightweight descriptor rather than copying through CPU memory. When backends are incompatible, the system gracefully falls back to standard CPU serialization.

Per package changes

rcl_buffer (new)

Core Buffer<T> container class — a drop-in std::vector<T> replacement backed by a polymorphic BufferImplBase<T> with CpuBufferImpl<T> as the default.
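The idea of a vector-like container that delegates its storage to a polymorphic implementation can be illustrated in a few lines. The sketch below is not the prototype's `rcl_buffer::Buffer<T>` API. The class names mirror the description (`BufferImplBase<T>`, `CpuBufferImpl<T>`), but every member function, the `backend_name()` hook and the constructors are hypothetical, chosen only to show how a CPU default and a vendor backend can sit behind one interface. Copy semantics, iterators and allocator support are omitted, and a real GPU backend could not hand out host pointers through `operator[]` like this.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical storage interface that a vendor backend would implement.
template <typename T>
class BufferImplBase {
 public:
  virtual ~BufferImplBase() = default;
  virtual std::size_t size() const = 0;
  virtual void resize(std::size_t n) = 0;
  virtual T* data() = 0;
  virtual const T* data() const = 0;
  virtual std::string backend_name() const = 0;
};

// Default backend: ordinary host memory held in a std::vector.
template <typename T>
class CpuBufferImpl : public BufferImplBase<T> {
 public:
  std::size_t size() const override { return v_.size(); }
  void resize(std::size_t n) override { v_.resize(n); }
  T* data() override { return v_.data(); }
  const T* data() const override { return v_.data(); }
  std::string backend_name() const override { return "cpu"; }

 private:
  std::vector<T> v_;
};

// Vector-like facade: callers keep using size(), resize() and operator[],
// while the storage behind them can come from any backend.
template <typename T>
class Buffer {
 public:
  Buffer() : impl_(std::make_unique<CpuBufferImpl<T>>()) {}
  explicit Buffer(std::unique_ptr<BufferImplBase<T>> impl)
      : impl_(std::move(impl)) {}
  std::size_t size() const { return impl_->size(); }
  void resize(std::size_t n) { impl_->resize(n); }
  T& operator[](std::size_t i) { return impl_->data()[i]; }
  const T& operator[](std::size_t i) const { return impl_->data()[i]; }
  std::string backend() const { return impl_->backend_name(); }

 private:
  std::unique_ptr<BufferImplBase<T>> impl_;
};
```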

rcl_buffer_backend (new)

Abstract BufferBackend plugin interface that vendors implement to provide custom memory backends (descriptor creation, serialization registration, endpoint lifecycle hooks).

rcl_buffer_backend_registry (new)

Singleton registry using pluginlib to discover and load BufferBackend plugins at runtime.

demo_buffer_backend, demo_buffer, demo_buffer_backend_msgs (new)

A reference demo backend plugin with its buffer implementation and descriptor message, used for testing the plugin system end-to-end.

test_rcl_buffer (new)

Integration tests verifying buffer transfer for both CPU and demo backends.

rosidl_generator_cpp (modified)

Code generator now emits rcl_buffer::Buffer<uint8_t> instead of std::vector<uint8_t> for uint8[] fields.

rosidl_runtime_cpp (modified)

Added trait specializations for Buffer<T> and a dependency on rcl_buffer.

rosidl_typesupport_fastrtps_cpp (modified)

Extended the type support callbacks struct with has_buffer_fields flag and endpoint-aware serialize/deserialize function pointers.

Added buffer_serialization.hpp with global registries for backend descriptor operations and FastCDR serializers, plus template helpers for Buffer serialization.

Updated code generation templates to detect Buffer fields and emit endpoint-aware serialization code.

rmw_zenoh_cpp (modified)

Added buffer_backend_loader module to initialize/shutdown backends during RMW lifecycle.

Extended liveliness key-expressions to advertise each endpoint’s supported backends.

Added graph cache discovery callbacks so buffer-aware publishers and subscribers detect each other dynamically.

Buffer-aware publishers create per-subscriber Zenoh endpoints and check per-endpoint backend compatibility before serialization.

Buffer-aware subscribers create per-publisher Zenoh subscriptions and pass publisher endpoint info into deserialization for correct backend reconstruction.
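The per-endpoint compatibility check comes down to picking a backend that both sides support and otherwise falling back to CPU serialization. Below is a minimal sketch of that decision. It assumes each endpoint advertises an ordered list of backend names. The prototype advertises them through liveliness key-expressions such as `backends:demo:version=1.0`, whose parsing and version matching are not modeled here. `chooseBackend` and the "publisher preference order wins" rule are illustrative assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// Return the first non-CPU backend, in the publisher's preference order, that
// the subscriber also supports; otherwise fall back to "cpu" (plain serialization).
std::string chooseBackend(const std::vector<std::string>& publisher,
                          const std::vector<std::string>& subscriber) {
  for (const auto& name : publisher) {
    if (name == "cpu") continue;  // CPU is the implicit fallback
    if (std::find(subscriber.begin(), subscriber.end(), name) != subscriber.end())
      return name;
  }
  return "cpu";
}
```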

Interpreting the log output

test_1pub_1sub_demo_to_demo produces detailed log output that highlights each step, so you can follow what happens when a buffer flows through the native buffer infrastructure.

Below are the key points to watch out for, which also provide good starting points for more detailed exploration of the code.

Note that if you used the Pixi setup above, the code base will have compile_commands.json available everywhere, and code navigation is available seamlessly through your favorite LSP server.

Backend Initialization

Each ROS 2 process discovers and loads buffer backend plugins via pluginlib, then registers their FastCDR serializers.

Discovered 1 buffer backend plugin(s) / Loaded buffer backend plugin: demo

Demo buffer descriptor registered with FastCDR

Buffer-Aware Publisher Creation

The RMW detects at creation time that sensor_msgs::msg::Image contains Buffer fields, and registers a discovery callback to be notified when subscribers appear.

Creating publisher for topic '/test_image' ... has_buffer_fields: '1'

Registered subscriber discovery callback for publisher on topic: '/test_image'

Buffer-Aware Subscriber Creation

The subscription is created in buffer-aware mode — no Zenoh subscriber is created yet; it waits for publisher discovery to create per-publisher endpoints dynamically.

has_buffer_fields: 1, is_buffer_aware: 1

Initialized buffer-aware subscription ... (endpoints created dynamically)

Mutual Discovery

Both sides discover each other through liveliness key-expressions that include backends:demo:version=1.0, confirm backend compatibility, and create per-peer Zenoh endpoints.

Discovered endpoint supports 'demo' backend

Creating endpoint for key='...'
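The discovery step boils down to parsing the backend list a peer advertises and deciding, per peer, whether both sides speak the same backend. The sketch below models only that decision in Python. It assumes the token format seen in the log (`backends:demo:version=1.0`), a hypothetical comma-separated form for several backends, a hypothetical "same major version" compatibility rule, and a fallback named "cpu" (the CPU backend is mentioned in the integration tests, but this naming is an assumption). None of these functions are part of rmw_zenoh_cpp.

```python
from typing import Dict, List


def parse_backends(token: str) -> Dict[str, str]:
    """Parse 'backends:<name>:version=<v>[,<name>:version=<v>...]' into {name: version}.

    The comma-separated multi-backend form is a hypothetical extension of the
    single-backend token seen in the log.
    """
    prefix = "backends:"
    if not token.startswith(prefix):
        return {}
    backends: Dict[str, str] = {}
    for entry in token[len(prefix):].split(","):
        name, _, attr = entry.partition(":")
        key, _, version = attr.partition("=")
        if name and key == "version" and version:
            backends[name] = version
    return backends


def compatible(local_version: str, remote_version: str) -> bool:
    # Assumed rule: equal major versions mean the descriptor formats agree
    return local_version.split(".")[0] == remote_version.split(".")[0]


def select_backend(preferred: List[str], local: Dict[str, str], remote: Dict[str, str]) -> str:
    """Pick the first preferred backend both endpoints support, else fall back to 'cpu'."""
    for name in preferred:
        if name in local and name in remote and compatible(local[name], remote[name]):
            return name
    return "cpu"
```

Because a publisher would run `select_backend` once per discovered subscriber, a single subscriber without demo support only pushes its own endpoint onto the fallback path; the other endpoints keep using the descriptor path.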

Buffer-Aware Publishing

The publisher serializes the buffer via the demo backend’s descriptor path instead of copying raw bytes, and routes to the per-subscriber endpoint.

Serializing buffer (backend: demo)

Descriptor created: size=192, data_hash=1406612371034480997

Buffer-Aware Deserialization

The subscriber uses endpoint-aware deserialization to reconstruct the buffer from the descriptor, restoring the demo backend implementation.

Deserialized backend_type: 'demo'

from_descriptor() called, size=192 elements, data_hash=1406612371034480997
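The log shows the publisher emitting a small descriptor (size=192 plus a data hash) instead of the payload, and the subscriber rebuilding the buffer through from_descriptor(). The following is a toy Python model of that round trip, not the rcl_buffer API. `Descriptor`, `ToyDemoBackend`, `content_hash`, `deserialize` and the `BACKENDS` registry are hypothetical names, and BLAKE2b stands in for whatever hash the demo backend actually computes. Both ends share one in-process dict here; a real backend would hand out handles to shared or device memory.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Descriptor:
    """What travels over the wire instead of the payload bytes (hypothetical fields)."""
    backend: str    # backend name, e.g. "demo"
    handle: int     # key into memory owned by the backend
    size: int       # number of uint8 elements
    data_hash: int  # integrity check used when reconstructing


def content_hash(data: bytes) -> int:
    # 64-bit BLAKE2b digest; the hash the real demo backend uses is not specified
    return int.from_bytes(hashlib.blake2b(data, digest_size=8).digest(), "little")


class ToyDemoBackend:
    """Stand-in backend whose 'accelerator memory' is a dict keyed by handle."""

    def __init__(self) -> None:
        self._memory: Dict[int, bytes] = {}
        self._next_handle = 0

    def to_descriptor(self, data: bytes) -> Descriptor:
        handle = self._next_handle
        self._next_handle += 1
        self._memory[handle] = data  # the payload stays with the backend
        return Descriptor("demo", handle, len(data), content_hash(data))

    def from_descriptor(self, d: Descriptor) -> bytes:
        data = self._memory[d.handle]
        if len(data) != d.size or content_hash(data) != d.data_hash:
            raise ValueError("descriptor does not match backend memory")
        return data


# Hypothetical global registry: backend name -> backend instance
BACKENDS: Dict[str, ToyDemoBackend] = {"demo": ToyDemoBackend()}


def deserialize(d: Descriptor) -> bytes:
    # Dispatch on the backend named in the descriptor, as the subscriber log suggests
    return BACKENDS[d.backend].from_descriptor(d)
```

The design point this illustrates is that the serialized message size no longer scales with the image: only the fixed-size descriptor crosses the transport, and the hash lets the receiver detect a stale or mismatched handle.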

Application-Level Validation

The subscriber confirms the data arrived through the demo backend path with correct content.

Received message using 'demo' backend - zero-copy path!

Image #1 validation: PASSED (backend: demo, size: 192)

What’s next

The code base will serve as a baseline for discussions in the Accelerated Memory Transport Working Group, where the overall concept and its details will be discussed and agreed upon.

In parallel, we are working on integrating fully featured CUDA and Torch backends into the system, which will allow for more visually appealing demos, as well as a blueprint for how more realistic vendor backends would be implemented.

rclpy support is another high priority item to integrate, ideally allowing for seamless tensor exchange between C++ and Python nodes.

Lastly, since Zenoh will not become the default middleware for the ROS2 Lyrical release, we will restart efforts to integrate the backend infrastructure into Fast DDS.

2 posts - 2 participants

ROS Discourse General: Turning Davos into a Robot City this July

Hi all!

I am helping to organize the Davos Tech Summit July 1st-4th this year: https://davostechsummit.com/

Rather than keeping it as a typical fair or tech conference behind closed doors, we had the idea of turning Davos into a robot city. For this, we need the help of robotics companies to actually deploy their robots around the city, which we can help set up and coordinate. Some of the companies that have already confirmed they are joining the Robot City concept are:

- Ascento with their security robot

- Tethys robotics

- Deep robotics

- Loki Robotics

- Astral

Humanoid robots:

- Agibot - A2 + X2 - tasks TBD

- Droidup - task TBD

- Galbot G1 - doing pick and place at a shop

- Unitree - Various robots and tasks

- Booster Robotics - K1

- Limx Dynamics - Olli

- Devanthro

There are also ongoing talks with companies that are open to bringing autonomous excavators, various inspection robots, and a drone show, and to setting up a location where people can pilot racing drones. We are also working on bringing autonomous cars and shuttles to drive around the city.

We were in Davos during WEF to promote this event and got some media coverage: Davos Tech Summit 2026 | Touching Intelligence

If you are interested in speaking at the event, please reach out! We are building the program during this month.

We are also looking into organizing a ROS Meetup during the event.

Let us know if you’d like to join.

Cheers!

3 posts - 2 participants

ROS Discourse General: ROS 2 Rust Meeting: February 2026

The next ROS 2 Rust Meeting will be Mon, Feb 9, 2026 2:00 PM UTC

The meeting room will be at https://meet.google.com/rxr-pvcv-hmu

In the unlikely event that the room needs to change, we will update this thread with the new info!

1 post - 1 participant

ROS Discourse General: Canonical Observability Stack Tryout | Cloud Robotics WG Meeting 2026-02-11

Please come and join us for this coming meeting at Wed, Feb 11, 2026 4:00 PM UTC→Wed, Feb 11, 2026 5:00 PM UTC, where we plan to deploy an example Canonical Observability Stack instance based on information from the tutorials and documentation.

Last meeting, the CRWG invited Guillaume Beuzeboc from Canonical to present on the Canonical Observability Stack (COS). COS is a general observability stack for devices such as drones, robots, and IoT devices. It operates on telemetry data, and the COS team has extended it to support robot-specific use cases. If you’re interested in watching the talk, it is available on YouTube.

The meeting link for next meeting is here, and you can sign up to our calendar or our Google Group for meeting notifications or keep an eye on the Cloud Robotics Hub.

Hopefully we will see you there!

2 posts - 1 participant

ROS Discourse General: Is there / could there be a standard robot package structure?

Hi all! I imagine this might be one of those recurring noob questions that keep popping up every few months, so please excuse my naivety.

I am currently working on a ROS 2 mobile robot (diff drive, with a main goal of easy hardware reconfigurability). Initial development took place as a tangled monolithic package, and we are now working on breaking it up into logically separate packages for: common files, simulation, physical robot implementation, navigation stack, example apps, hardware extensions, etc.

To my understanding, there is no official document that recommends a project structure for this, yet “established” robots (e.g. turtlebot4, UR, rosbot) seem to follow a similar convention along the lines of:

- xyz_description – URDFs, meshes, visuals

- xyz_bringup – Launch and configuration for the “real” physical implementation

- xyz_gazebo / xyz_simulation – Launch and configuration for a simulated equivalent robot

- xyz_navigation – Navigation stack

- …

None seem to be exactly the same, though. My understanding is that this is a rough convention that the community converged to over time, and not something well defined.

My question is thus twofold:

- Is there a standard for splitting up a robot’s codebase into packages, which I’m unaware of?

- If not, would there be any value in writing up such a recommendation?

Cheers!

3 posts - 3 participants

ROS Discourse General: [Release] GerdsenAI's Depth Anything 3 ROS2 Wrapper with Real-time TensorRT for Jetson

Update: TensorRT Optimization, 7x Performance Improvement Over Previous PyTorch Release!

Great news for everyone following this project! We’ve successfully implemented TensorRT 10.3 acceleration, and the results are significant:

Performance Improvement

| Metric | Before (PyTorch) | After (TensorRT) | Improvement |
|---|---|---|---|
| FPS | 6.35 | 43+ | 6.8x faster |
| Inference Time | 153 ms | ~23 ms | 6.6x faster |
| GPU Utilization | 35-69% | 85%+ | More efficient |

Test Platform: Jetson Orin NX 16GB (Seeed reComputer J4012), JetPack 6.2, TensorRT 10.3

Key Technical Achievement: Host-Container Split Architecture

We solved a significant Jetson deployment challenge - TensorRT Python bindings are broken in current Jetson container images (dusty-nv/jetson-containers#714). Our solution:

HOST (JetPack 6.x)
+--------------------------------------------------+
| TRT Inference Service (trt_inference_shm.py)     |
| - TensorRT 10.3, ~15ms inference                 |
+--------------------------------------------------+
        ↑
        | /dev/shm/da3 (shared memory, ~8ms IPC)
        ↓
+--------------------------------------------------+
| Docker Container (ROS2 Humble)                   |
| - Camera drivers, depth publisher                |
+--------------------------------------------------+

This architecture enables real-time TensorRT inference while keeping ROS2 in a clean container environment.
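The hand-off between the host service and the container is a shared-memory segment. As a rough illustration of that pattern, here is a Python sketch using the standard `multiprocessing.shared_memory` module; on Linux a segment created with `SharedMemory(name="da3", create=True, size=...)` appears as /dev/shm/da3. The frame layout (width, height, frame counter, then float32 depth) and the function names are hypothetical, since the post doesn't describe the project's actual format. The container also needs access to the host's /dev/shm (for example via Docker's `--ipc=host` or a bind mount); the post doesn't say which approach the project uses.

```python
import struct
from multiprocessing import shared_memory

# Hypothetical frame layout: width, height, frame counter, then float32 depth values
HEADER = struct.Struct("<IIQ")


def write_frame(shm: shared_memory.SharedMemory, width: int, height: int,
                frame_id: int, depth: bytes) -> None:
    """Producer side (host inference service): header first, then depth bytes."""
    if HEADER.size + len(depth) > shm.size:
        raise ValueError("frame does not fit in the shared memory segment")
    HEADER.pack_into(shm.buf, 0, width, height, frame_id)
    shm.buf[HEADER.size:HEADER.size + len(depth)] = depth


def read_frame(shm: shared_memory.SharedMemory):
    """Consumer side (ROS node in the container): copy one frame out of the segment."""
    width, height, frame_id = HEADER.unpack_from(shm.buf, 0)
    n_bytes = width * height * 4  # one float32 per pixel
    return width, height, frame_id, bytes(shm.buf[HEADER.size:HEADER.size + n_bytes])
```

This sketch has no synchronization, so a reader can observe a half-written frame. A real service would add a sequence counter checked before and after the copy, or a semaphore, to avoid torn reads.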

One-Click Demo

git clone https://github.com/GerdsenAI/GerdsenAI-Depth-Anything-3-ROS2-Wrapper.git
cd GerdsenAI-Depth-Anything-3-ROS2-Wrapper
./run.sh

First run takes ~15-20 minutes (Docker build + TensorRT engine). Subsequent runs start in ~10 seconds.

Compared to Other Implementations

We’re aware of ika-rwth-aachen/ros2-depth-anything-v3-trt which achieves 50 FPS on desktop RTX 6000. Our focus is different:

- Embedded-first: Optimized for Jetson deployment challenges

- Container-friendly: Works around broken TRT bindings in Jetson images

- Production-ready: One-click deployment, auto-dependency installation

Call for Contributors

We’re looking for help with:

- Test coverage for SharedMemory/TensorRT code paths

- Validation on other Jetson platforms (AGX Orin, Orin Nano)

- Point cloud generation (currently depth-only)

Repo: GitHub - GerdsenAI/GerdsenAI-Depth-Anything-3-ROS2-Wrapper: ROS2 wrapper for Depth Anything 3 (https://github.com/ByteDance-Seed/Depth-Anything-3)

License: MIT

@Phocidae @AljazJus - the TensorRT optimization should help significantly with your projects! Let me know if you run into any issues.

1 post - 1 participant

ROS Discourse General: Getting started with Pixi and RoboStack

Hi all,

I noticed a lot of users get stuck when trying Pixi and RoboStack, simply because the initial setup is too hard.

To help you out, we’ve created a little tool called pixi-ros that maps your package.xml files to a pixi.toml.

It basically does what rosdep does, but since rosdep’s installation logic doesn’t translate well to a Pixi workspace, this was always complex to implement.
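The post doesn't show how pixi-ros works internally, so the following is only a hypothetical Python sketch of the core mapping step: read the dependency tags from a package.xml and turn ROS package names into RoboStack conda package names (`ros-<distro>-<name>` with underscores turned into dashes). The `ros_packages` set is a stand-in for a real index lookup, and system rosdep keys such as `eigen` are returned unresolved because they need a rosdep-style mapping, which is exactly the hard part the post describes. `condition` attributes from format 3 manifests are ignored.

```python
import xml.etree.ElementTree as ET
from typing import List, Set, Tuple

# package.xml dependency tags (format 2/3); 'condition' attributes are ignored here
DEP_TAGS = ("depend", "build_depend", "exec_depend", "buildtool_depend", "test_depend")


def read_deps(package_xml: str) -> List[str]:
    """Collect unique dependency keys from a package.xml string, in tag order."""
    root = ET.fromstring(package_xml)
    deps: List[str] = []
    for tag in DEP_TAGS:
        for element in root.findall(tag):
            key = (element.text or "").strip()
            if key and key not in deps:
                deps.append(key)
    return deps


def robostack_name(package: str, distro: str) -> str:
    # RoboStack naming: ros-<distro>-<package name with underscores turned into dashes>
    return f"ros-{distro}-{package.replace('_', '-')}"


def to_pixi_dependencies(deps: List[str], distro: str,
                         ros_packages: Set[str]) -> Tuple[str, List[str]]:
    """Render a pixi.toml [dependencies] table; non-ROS keys are returned unresolved."""
    lines = ["[dependencies]"]
    unresolved: List[str] = []
    for key in deps:
        if key in ros_packages:
            lines.append(f'{robostack_name(key, distro)} = "*"')
        else:
            unresolved.append(key)  # system keys (e.g. eigen) need a rosdep-style lookup
    return "\n".join(lines), unresolved
```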

Instead of waiting until we figured out a “clean” way of doing that, I wanted to get something out that helps you get started today.

Here is a small video to get you started:

Pixi ROS extension; think rosdep for Pixi!

I’m very open to contributions or improvement ideas!

P.S. I hope pixi-ros will become obsolete ASAP thanks to our development of pixi-build-ros, which can read package.xml files directly. However, as of today (5-feb-2026), I would only advise that workflow for advanced users due to its experimental nature.

1 post - 1 participant

ROS Discourse General: Accelerated Memory Transport Working Group Announcement

Hi

I’m pleased to announce that the Accelerated Memory Transport Working Group was officially approved by the ROS PMC on January 27th 2026.

This group will focus on extending the ROS transport utilities to enable better memory management throughout the pipeline, so that available hardware accelerators can be used efficiently, while providing fallbacks in cases where the whole system cannot handle the advanced transport.

The work may involve code in repositories including, but not limited to:

- https://github.com/ros2/rosidl

- https://github.com/ros2/rclcpp

- https://github.com/ros2/rcl

- https://github.com/ros2/rmw

- https://github.com/ros2/rosidl_typesupport

- https://github.com/ros2/rcl_interfaces

The first meeting of the Accelerated Memory Transport Working Group will be on Wed, Feb 11, 2026 4:00 PM UTC → Wed, Feb 11, 2026 5:00 PM UTC

If you have any questions about the process, please reach out to me: acordero@honurobotics.com

Thank you

2 posts - 2 participants

ROS Discourse General: 🚀 Update: LinkForge v1.2.1 is now live on Blender Extensions!

We just pushed a critical stability update for anyone importing complex robot models.

What’s New in v1.2.1?

Fixed “Floating Parts”: We resolved a transform baking issue where imported meshes would drift from their parent links. Your imports are now 1:1 accurate.

Smarter XACRO: Use complex math expressions in your property definitions? We now parse mixed-type arguments robustly.

Implemented a native high-fidelity XACRO parser.

If you are building robots in Blender for ROS 2 or Gazebo, this is the most stable version yet.

Get it on GitHub: linkforge-github

Get it on Blender Extensions: linkforge-blender

1 post - 1 participant

ROS Discourse General: Lessons learned migrating directly to ROS 2 Kilted Kaiju with pixi

Hi everyone,

We recently completed our full migration from ROS 1 Noetic directly to ROS 2 Kilted Kaiju. We decided to skip the intermediate LTS releases (Humble/Jazzy) to land directly on the bleeding edge features and be prepared for the next LTS Lyrical in May 2026.

Some of you might have seen our initial LinkedIn post about the strategy, which was kindly picked up by OSRF. Since then, we’ve had time to document the actual execution.

You can see the full workflow (including a video of the “trash bin” migration) in my follow-up post here: Watch the Migration Workflow on LinkedIn

I wanted to share the technical breakdown here on Discourse, specifically regarding our usage of Pixi, Executors, and the RMW.

1. The Environment Strategy: Pixi & Conda

We bypassed the system-level install entirely. Since we were already using Pixi and Conda for our legacy ROS 1 stack, we leveraged this to make the transition seamless.

- Side-by-Side Development: This allowed us to run ROS 1 Noetic and ROS 2 Kilted environments on the same machines without environment variable conflicts.

- The “Disposable” Workspace: We treated workspaces as ephemeral. We could wipe a folder, resolve, and install the full Kilted stack from scratch in <60 seconds (installing user-space dependencies only).

Pixi Gotchas:

- Versioning: We found we needed to remove the pixi.lock file when pulling the absolute latest build numbers (since we were re-publishing packages with increasing build numbers rapidly during the migration).

- File Descriptors: On large workspaces, Pixi occasionally ran out of file descriptors during the install phase. A simple retry (or ulimit bump) always resolved this.
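The "ulimit bump" is normally done in the shell with `ulimit -n <N>` before running `pixi install`. If installs are driven from a Python script instead, the standard `resource` module (Unix only) can raise the soft open-file limit, and child processes started afterwards inherit it. This is a minimal sketch; the post doesn't say which limit the team used.

```python
import resource


def raise_nofile_limit(target: int) -> int:
    """Raise this process's soft open-file limit toward `target` (never lowering it).

    Child processes started afterwards inherit the new limit, like `ulimit -n`.
    """
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    if soft == resource.RLIM_INFINITY:
        return soft  # already unlimited
    if hard != resource.RLIM_INFINITY:
        target = min(target, hard)  # an unprivileged process cannot exceed the hard limit
    if target > soft:
        try:
            resource.setrlimit(resource.RLIMIT_NOFILE, (target, hard))
        except (ValueError, OSError):
            pass  # e.g. macOS rejects values above its per-process maximum
    return resource.getrlimit(resource.RLIMIT_NOFILE)[0]
```

For example, calling `raise_nofile_limit(65536)` and then `subprocess.run(["pixi", "install"], check=True)` runs the install under the raised limit.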

2. Observability & AI

We relied heavily on Claude Code to handle the observability side of the migration. Instead of maintaining spreadsheets and bash scripts, we had Claude generate “throw-away” web dashboards to visualize:

- Build orders

- Real-time CI status

- Package porting progress

(See the initial LinkedIn post for examples of these dashboards)

3. The Workflow

Our development loop looked like this: Feature Branch → CI Success → Publish to Staging → pixi install (on Robot) → Test

Because we didn’t rely on baking Docker images for every test, the iteration loop (Code → Robot) was extremely fast.

4. Technical Pain Points & Findings

This is where we spent most of our debugging time:

Executors (Python Nodes):

- SingleThreadedExecutor: Great for speed, but lacked the versatility we needed (e.g., relying on callbacks within callbacks for certain nodes).

- MultiThreadedExecutor: This is what we are running mostly now. We noticed some performance overhead, so we pushed high-frequency topic subscriptions (e.g., tf and joint_states) to C++ nodes to compensate.

- ExperimentalEventExecutor: We tried to implement this but couldn’t get it stable enough for production yet.
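The "callbacks within callbacks" limitation comes from thread count. If a callback blocks waiting on work that must run on the same executor (for example, a synchronous service call made from inside a timer callback), a single-threaded executor has no free thread to run that work. The sketch below reproduces this with the standard library's `ThreadPoolExecutor` as an analogy. It is not rclpy code. In rclpy, the usual remedy is a `MultiThreadedExecutor` combined with a `ReentrantCallbackGroup`, or with separate `MutuallyExclusiveCallbackGroup`s, so the nested callback can run on another thread.

```python
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout


def outer_callback(pool: ThreadPoolExecutor, timeout: float) -> str:
    """Plays the role of a callback that blocks on work scheduled on the same executor."""
    inner = pool.submit(lambda: "inner done")  # e.g. a response handler
    try:
        return inner.result(timeout=timeout)
    except FutureTimeout:
        return "timed out"  # the only worker thread is busy running this function


def run(workers: int, timeout: float) -> str:
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return pool.submit(outer_callback, pool, timeout).result()
```

With one worker, the inner task can only start after the outer one gives up, so `run(1, ...)` times out. With two workers, the inner task runs concurrently and the outer callback gets its result.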

RMW Implementation:

- We started with the default FastRTPS but encountered stability and latency issues in our specific setup.

- We switched to CycloneDDS and saw an immediate improvement in stability.

Questions for the Community:

- Has anyone put the new Zenoh RMW through its paces in Kilted/Rolling yet? We are eyeing that as a potential next step.

- Are others testing Kilted in production contexts yet, or have you had better luck with the Event Executor stability?

Related discussion on tooling: Pixi as a co-official way of installing ROS on Linux

3 posts - 3 participants

ROS Discourse General: Stop rewriting legacy URDFs by hand 🛑

Migrating robots from ROS 1 to ROS 2 is usually a headache of XML editing and syntax checking.

In this video, I demonstrate how LinkForge solves this in minutes using the SO-ARM100.

The Workflow:

Import: Load legacy ROS 1 URDFs directly into Blender with LinkForge.

Interact: Click links and joints to visualize properties instantly.

Modernize: Auto-generate ros2_control interfaces from existing joints with one click.

Export: Output a clean, fully compliant ROS 2 URDF ready for Jazzy.

LinkForge handles the inertia matrices, geometry offsets, and tag upgrades automatically.

3 posts - 2 participants

ROS Discourse General: Stable Distance Sensing for ROS-Based Platforms in Low-Visibility Environments

In nighttime, foggy conditions, or complex terrain environments, many ROS-based platforms (UAVs, UGVs, or fixed installations) struggle with reliable distance perception when relying solely on vision or illumination-dependent sensors.

In our recent projects, we’ve been focusing on stable, continuous distance sensing as a foundational capability for:

- ground altitude estimation

- obstacle distance measurement

- terrain-aware navigation

Millimeter-wave radar has shown strong advantages in these scenarios due to its independence from lighting conditions and robustness in fog, dust, or rain. We are currently working with both 24GHz and 77GHz mmWave radar configurations, targeting:

- mid-to-long-range altitude sensing

- close-range, high-stability distance measurement

We’re interested in discussing with the ROS community:

- How others integrate mmWave radar data into ROS (ROS1 / ROS2)

- Message formats or filtering strategies for distance output

- Fusion approaches with vision or IMU for terrain-following or obstacle detection
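For single-value distance output, ROS already has sensor_msgs/msg/Range, whose `min_range` and `max_range` fields suggest a natural first filtering step: discard readings outside the sensor's rated span. Below is a minimal Python sketch of one common strategy, a validity gate followed by a sliding-window median that suppresses isolated spikes. The class name and the window size are illustrative assumptions, not taken from the post, and the output is meant for the `range` field of such a message.

```python
import math
from collections import deque
from statistics import median
from typing import Deque, Optional


class RangeFilter:
    """Validity gate plus sliding-window median for a stream of distance readings (m)."""

    def __init__(self, min_range: float, max_range: float, window: int = 5) -> None:
        self.min_range = min_range
        self.max_range = max_range
        self.samples: Deque[float] = deque(maxlen=window)

    def update(self, reading: float) -> Optional[float]:
        # Drop NaN/inf and anything outside the sensor's rated span
        if math.isfinite(reading) and self.min_range <= reading <= self.max_range:
            self.samples.append(reading)
        # The median suppresses isolated spikes such as spurious returns
        return median(self.samples) if self.samples else None
```

A median adds a lag of about half a window, so fast altitude changes argue for a short window. A larger window trades responsiveness for smoother output.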

Any shared experience, references, or best practices would be greatly appreciated.

1 post - 1 participant

ROS Discourse General: developing an autonomous weeding robot for orchards using ROS2 Jazzy

I’m developing an autonomous weeding robot for orchards using ROS2 Jazzy. The robot needs to navigate tree rows and weed close to trunks (20cm safety margin).

My approach:

GPS (RTK ideally) for global path planning and navigation between rows

Visual-inertial SLAM for precision control when working near trees - GPS accuracy isn’t sufficient for safe 20cm clearances

Need robust sensor fusion to hand off between the two modes

The interesting challenge is transitioning smoothly between GPS-based navigation and VIO-based precision maneuvering as the robot approaches trees.
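One way to avoid a hard mode switch is to fuse both sources continuously and let their uncertainties decide the weighting. This is what covariance-based filters do; for example, robot_localization's EKF, used with navsat_transform_node for GPS input, applies this kind of weighting across the full state. The Python sketch below shows the underlying idea for one coordinate as an inverse-variance weighted mean. It assumes the two estimates are independent and unbiased, and any variances you plug in are hypothetical, not values from the post. As the GPS variance grows near the canopy and the VIO variance stays small, the fused estimate shifts smoothly toward VIO.

```python
from typing import Tuple


def fuse(gps: float, gps_var: float, vio: float, vio_var: float) -> Tuple[float, float]:
    """Inverse-variance weighted mean of two independent estimates of one coordinate.

    Returns the fused estimate and its variance. Variances must be positive.
    """
    if gps_var <= 0 or vio_var <= 0:
        raise ValueError("variances must be positive")
    w_gps, w_vio = 1.0 / gps_var, 1.0 / vio_var
    estimate = (w_gps * gps + w_vio * vio) / (w_gps + w_vio)
    return estimate, 1.0 / (w_gps + w_vio)
```

The same weighting breaks down when one source is biased rather than merely noisy (for example, GPS multipath near trunks). In that case, outlier rejection or gating on the GPS input matters as much as the fusion itself.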

Questions:

What VIO SLAM packages work reliably with ROS2 Jazzy in outdoor agricultural settings?

How have others handled the handoff between GPS and visual odometry for hybrid localization?

Any recommendations for handling challenging visual conditions (varying sunlight, repetitive tree textures)?

Currently working in simulation - would love to hear from anyone who’s taken similar systems to hardware.

1 post - 1 participant

ROS Discourse General: Error reviewing Action Feedback messages in MCAP files

Hello,

We are using Kilted and record mcap bags with a command line approximately like this:

ros2 bag record -o <filename> --all-topics --all-services --all-actions --include-hidden-topics

When we open the MCAP files in Lichtblick or Foxglove we get this error in Problems panel and we can’t review the feedback messages:

Error in topic <redacted>/_action/feedback (channel 6)

Message encoding cdr with schema encoding '' is not supported (expected "ros2msg" or "ros2idl" or "omgidl")

At this point we are at a loss as to how to resolve this - do we need to publish the schema encoding somewhere?

Thanks.

3 posts - 2 participants